publications

2025

-

Towards a Linear-Ramp QAOA protocol: Evidence of a scaling advantage in solving some combinatorial optimization problems. J. A. Montanez-Barrera and Kristel Michielsen. npj Quantum Information, 2025.

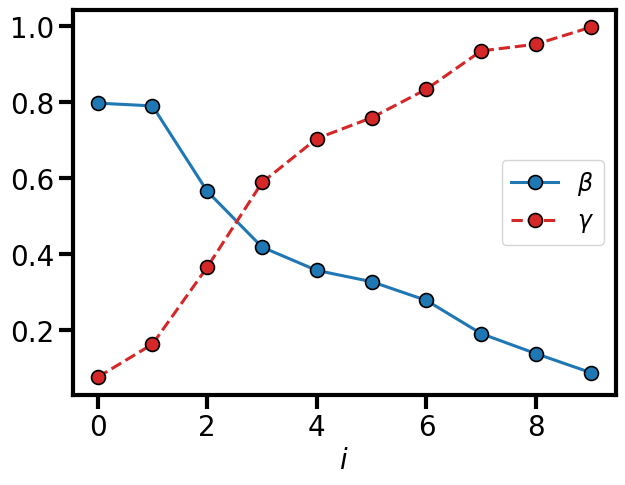

The Quantum Approximate Optimization Algorithm (QAOA) is a promising algorithm for solving combinatorial optimization problems (COPs), with performance governed by variational parameters. While most prior work has focused on classically optimizing these parameters, we demonstrate that fixed linear-ramp schedules (linear-ramp QAOA, LR-QAOA) can efficiently approximate optimal solutions across diverse COPs. Simulations with up to 42 qubits and 400 layers suggest that the success probability decays with problem size at a rate whose coefficient decreases as the number of layers increases. Comparisons with classical algorithms, including simulated annealing, Tabu Search, and branch-and-bound, show a scaling advantage for LR-QAOA. We show results of LR-QAOA on multiple QPUs (IonQ, Quantinuum, IBM) with up to 109 qubits and 100 layers, and circuits requiring 21,200 CNOT gates. Finally, we present a noise model based on two-qubit gate counts that accurately reproduces the experimental behavior of LR-QAOA.
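A linear-ramp schedule can be sketched in a few lines. The exact parameterization is an assumption here: one common choice ramps the cost angles γ up to Δγ and the mixer angles β down from Δβ over p layers. The function name and the values of `delta_gamma` and `delta_beta` are illustrative, not the authors' code.

```python
def linear_ramp_schedule(p, delta_gamma, delta_beta):
    """Return (gammas, betas) for p QAOA layers on a linear ramp.

    Assumed parameterization: gamma_i = (i + 1) / p * delta_gamma and
    beta_i = (1 - i / p) * delta_beta for i = 0, ..., p - 1.
    """
    if p < 1:
        raise ValueError("p must be a positive integer")
    gammas = [(i + 1) / p * delta_gamma for i in range(p)]  # ramps up to delta_gamma
    betas = [(1 - i / p) * delta_beta for i in range(p)]    # ramps down from delta_beta
    return gammas, betas


gammas, betas = linear_ramp_schedule(p=4, delta_gamma=0.6, delta_beta=0.3)
print(gammas)
print(betas)
```

Because the whole schedule is fixed by two numbers (Δγ, Δβ) rather than 2p free angles, deep circuits need no per-layer classical optimization.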

-

Transfer learning of optimal QAOA parameters in combinatorial optimization. J. A. Montanez-Barrera, Dennis Willsch, and Kristel Michielsen. Quantum Information Processing, 2025.

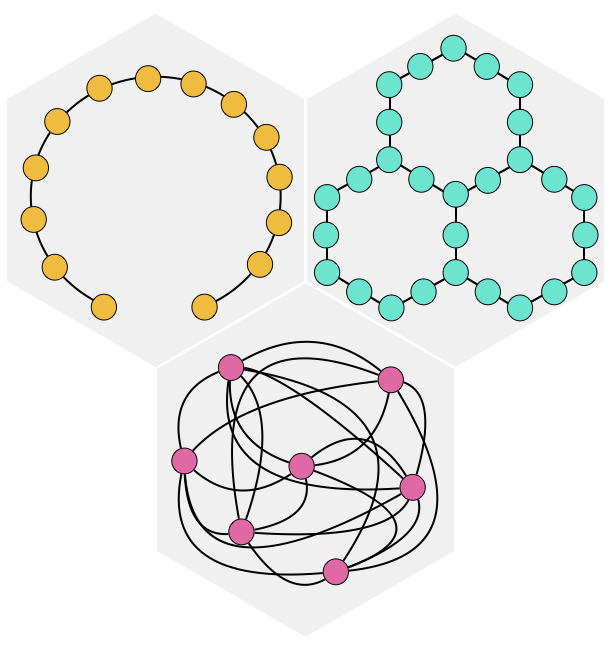

Solving combinatorial optimization problems (COPs) is a promising application of quantum computation, and the Quantum Approximate Optimization Algorithm (QAOA) is one of the most studied quantum algorithms for solving them. However, multiple factors make the parameter search of the QAOA a hard optimization problem. In this work, we study transfer learning (TL), a methodology that reuses QAOA parameters pre-trained on one problem instance for different COP instances. This methodology can reduce the need for classical optimization to find good parameters for individual problems. To this end, we select small cases of the traveling salesman problem (TSP), the bin packing problem (BPP), the knapsack problem (KP), the weighted maximum cut (MaxCut) problem, the maximal independent set (MIS) problem, and portfolio optimization (PO), and find optimal parameters for p layers. We compare how well the parameters found for one problem adapt to the others. Among the different problems, BPP produces the most transferable parameters, keeping the probability of finding the optimal solution above the level corresponding to a quadratic speedup over random guessing for problem sizes up to 42 qubits and p = 10 layers. Using the BPP parameters, we run MIS instances of up to 18 qubits on IonQ Harmony and Aria, Rigetti Aspen-M-3, and IBM Brisbane. The results indicate that IonQ Aria yields the best overlap with the ideal probability distribution. Additionally, we show that cross-platform TL is possible using the D-Wave Advantage quantum annealer with the parameters found for BPP, improving performance over the default protocols for MIS with up to 170 qubits. Our results suggest that there are QAOA parameters that generalize well across different COPs and annealing protocols.
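The "quadratic speedup over random guessing" criterion can be made concrete with a short calculation, assuming a single optimal bitstring among the 2^n candidates of an n-qubit encoding:

```latex
P_{\mathrm{random}}(n) = 2^{-n}, \qquad
P_{\mathrm{quad}}(n) = \sqrt{P_{\mathrm{random}}(n)} = 2^{-n/2}

n = 42:\quad P_{\mathrm{random}} = 2^{-42} \approx 2.3 \times 10^{-13}, \qquad
P_{\mathrm{quad}} = 2^{-21} \approx 4.8 \times 10^{-7}
```

Under this assumption, transferred parameters meet the criterion when the measured probability of the optimum stays above P_quad(n).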

-

Benchmarking neutral atom-based quantum processors at scale. Andrea B. Rava, Kristel Michielsen, and J. A. Montanez-Barrera. 2025.

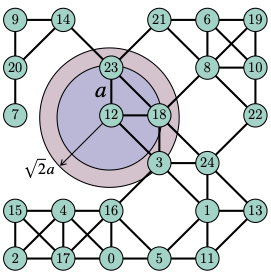

In recent years, neutral atom-based quantum computation has been established as a competitive alternative for the realization of fault-tolerant quantum computation. However, as with other quantum technologies, various sources of noise limit its performance. With processors continuing to scale up, new techniques are needed to characterize and compare them in order to track their progress. In this work, we present two systematic benchmarks that evaluate these quantum processors at scale. We use the quantum adiabatic algorithm (QAA) and the quantum approximate optimization algorithm (QAOA) to solve maximal independent set (MIS) instances of random unit-disk graphs. These benchmarks are scalable because they rely not on prior knowledge of the system’s evolution but on the quality of the MIS solutions obtained. We benchmark quera_aquila and pasqal_fresnel on problem sizes up to 102 and 85 qubits, respectively. Overall, quera_aquila performs better on both the QAOA and QAA instances. Finally, we generate MIS instances of up to 1000 qubits, providing scalable benchmarks for evaluating future, larger processors as they become available.
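A scaled-down version of the benchmark ingredients can be sketched in Python. It builds a random unit-disk graph (points in a square, edges between points closer than a radius), checks whether a sampled bitstring is an independent set, and scores it against the exact MIS size. The box size, radius, and the |S|/|MIS| score are assumptions for illustration. The exhaustive search is only practical for small graphs (roughly 20 nodes or fewer).

```python
import itertools
import math
import random


def unit_disk_graph(n, side, radius, seed=0):
    """Place n points uniformly in a side x side box; connect pairs within `radius`."""
    rng = random.Random(seed)
    points = [(rng.uniform(0, side), rng.uniform(0, side)) for _ in range(n)]
    edges = [
        (i, j)
        for i, j in itertools.combinations(range(n), 2)
        if math.dist(points[i], points[j]) <= radius
    ]
    return points, edges


def is_independent(bits, edges):
    """True if no edge has both endpoints selected."""
    return all(not (bits[i] and bits[j]) for i, j in edges)


def mis_size(n, edges):
    """Exact maximum independent set size by exhaustive search (small n only)."""
    best = 0
    for bits in itertools.product((0, 1), repeat=n):
        size = sum(bits)
        if size > best and is_independent(bits, edges):
            best = size
    return best


def score(bits, edges, best):
    """Hypothetical quality metric: |S| / |MIS| for feasible samples, 0 otherwise."""
    return sum(bits) / best if is_independent(bits, edges) else 0.0


points, edges = unit_disk_graph(n=12, side=3.0, radius=1.0, seed=1)
best = mis_size(12, edges)
print(len(edges), best)
```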

-

Evaluating the performance of quantum processing units at large width and depth. J. A. Montanez-Barrera, Kristel Michielsen, and David E. Bernal Neira. 2025. Citations: 9

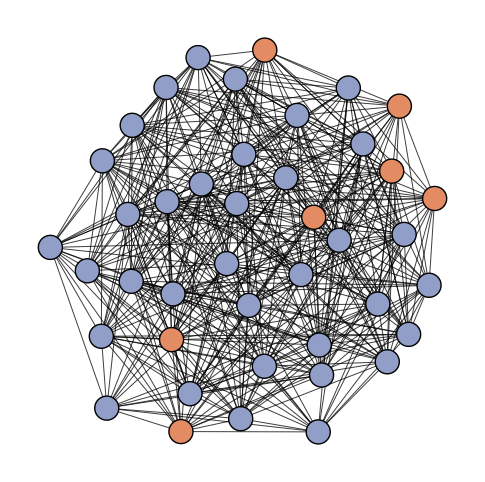

Quantum computers have now surpassed classical simulation limits, yet noise continues to limit their practical utility. As the field shifts from proof-of-principle demonstrations to early deployments, there is no standard method for meaningfully and scalably comparing heterogeneous quantum hardware. Existing benchmarks typically focus on gate-level fidelity or constant-depth circuits, offering limited insight into algorithmic performance at depth. Here we introduce a benchmarking protocol based on the linear ramp quantum approximate optimization algorithm (LR-QAOA), a fixed-parameter, deterministic variant of QAOA. LR-QAOA quantifies a QPU’s ability to preserve a coherent signal as circuit depth increases, identifying when performance becomes statistically indistinguishable from random sampling. We apply this protocol to 24 quantum processors from six vendors, testing problems with up to 156 qubits and 10,000 layers across 1D-chains, native layouts, and fully connected topologies. This constitutes the most extensive cross-platform quantum benchmarking effort to date, with circuits reaching a million two-qubit gates. LR-QAOA offers a scalable, unified benchmark across platforms and architectures, making it a tool for tracking performance in quantum computing.
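The "statistically indistinguishable from random sampling" criterion can be illustrated with a simple two-sample test. This is a sketch under assumptions. The min-max approximation ratio and the Welch-style z-score with a threshold of 3 are illustrative choices, not necessarily the statistic used in the paper, and the cost samples are made up.

```python
from math import sqrt
from statistics import mean, stdev


def approximation_ratio(costs, c_min, c_max):
    """Map the mean sampled cost onto [0, 1] for a minimization problem (1 = always optimal)."""
    return (c_max - mean(costs)) / (c_max - c_min)


def distinguishable_from_random(qpu_costs, random_costs, z_threshold=3.0):
    """True if QPU samples have a significantly lower mean cost than uniform random samples.

    Uses a Welch-style z-score on the difference of means (an assumed test).
    """
    diff = mean(random_costs) - mean(qpu_costs)  # > 0 when the QPU beats random
    se = sqrt(stdev(qpu_costs) ** 2 / len(qpu_costs)
              + stdev(random_costs) ** 2 / len(random_costs))
    if se == 0:
        return diff > 0
    return diff / se > z_threshold


# Hypothetical cost samples at a shallow and a deep circuit depth
shallow = [1, 2, 1, 2, 1, 3] * 20
deep = [4, 6, 5, 5, 6, 4] * 20
random_baseline = [5, 6, 4, 5, 6, 4] * 20
print(distinguishable_from_random(shallow, random_baseline))  # True
print(distinguishable_from_random(deep, random_baseline))     # False
```

Sweeping the depth and recording where the test first fails gives one way to locate the point at which a coherent signal is lost.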

-

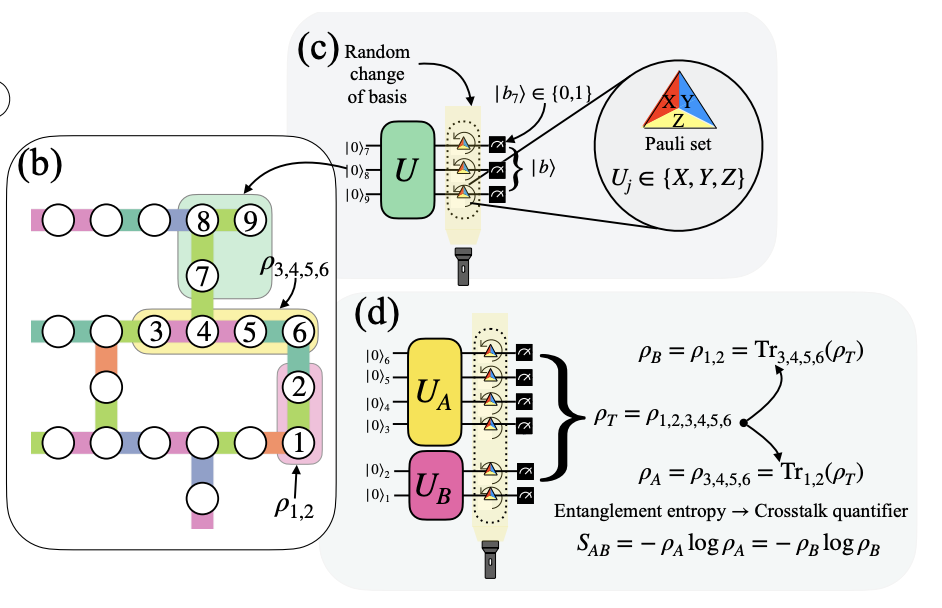

Diagnosing crosstalk in large-scale QPUs using zero-entropy classical shadows. J. A. Montañez-Barrera, G. P. Beretta, Kristel Michielsen, and 1 more author. Quantum Science and Technology, 2025.

As quantum processing units (QPUs) scale toward hundreds of qubits, diagnosing noise-induced correlations (crosstalk) becomes critical for reliable quantum computation. In this work, we introduce Zero-Entropy Classical Shadows (ZECS), a diagnostic tool that uses a rank-one quantum state tomography (QST) reconstruction from classical shadow (CS) data to diagnose crosstalk. We apply ZECS to trapped-ion and superconducting QPUs, including ionq_forte (36 qubits), ibm_brisbane (127 qubits), and ibm_fez (156 qubits), using between 1,000 and 6,000 samples. With these samples, we use ZECS to characterize crosstalk among disjoint qubit subsets across the full hardware. This information is then used to select low-crosstalk qubit subsets on ibm_fez for executing the Quantum Approximate Optimization Algorithm (QAOA) on a 20-qubit problem. Compared to the best qubit selection via Qiskit transpilation, our method improves solution quality by 10% and increases algorithmic coherence by 33%. ZECS offers a scalable and measurement-efficient approach to diagnosing crosstalk in large-scale QPUs.
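The two ingredients named in the abstract, a classical-shadow estimate and a rank-one (zero-entropy) reconstruction, can be sketched for a single qubit with NumPy. This is a generic illustration under assumptions, not ZECS itself. It simulates random Pauli-basis measurements of a known state, averages the standard snapshots 3U†|b⟩⟨b|U − I, and takes the leading eigenvector as the nearest pure state. How ZECS turns such reconstructions into a crosstalk diagnosis is not shown.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
S_DAG = np.diag([1, -1j]).astype(complex)
# Rotations mapping the X, Y and Z eigenbases onto the computational basis
BASIS_ROTATIONS = [H, H @ S_DAG, I2]


def shadow_estimate(psi, n_samples, rng):
    """Average of single-qubit classical-shadow snapshots for the pure state psi."""
    total = np.zeros((2, 2), dtype=complex)
    for _ in range(n_samples):
        U = BASIS_ROTATIONS[rng.integers(3)]      # random Pauli measurement basis
        probs = np.abs(U @ psi) ** 2
        b = rng.choice(2, p=probs / probs.sum())  # simulated measurement outcome
        ket = np.zeros(2, dtype=complex)
        ket[b] = 1.0
        # Inverse of the random-Pauli measurement channel: 3 U^dag |b><b| U - I
        total += 3 * U.conj().T @ np.outer(ket, ket.conj()) @ U - I2
    return total / n_samples


def rank_one_reconstruction(rho_hat):
    """Nearest pure state: eigenvector of the largest eigenvalue of the Hermitian estimate."""
    _, vecs = np.linalg.eigh(rho_hat)
    return vecs[:, -1]


rng = np.random.default_rng(7)
psi = np.array([1, 1], dtype=complex) / np.sqrt(2)  # |+>
rho_hat = shadow_estimate(psi, n_samples=4000, rng=rng)
phi = rank_one_reconstruction(rho_hat)
fidelity = abs(np.vdot(phi, psi)) ** 2
print(round(fidelity, 3))
```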

2024

-

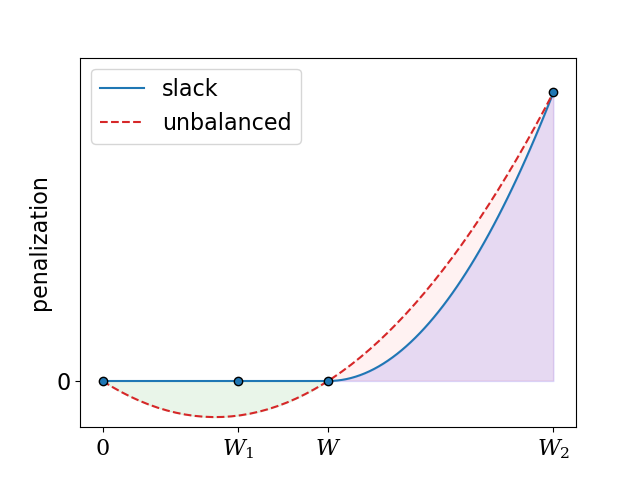

Unbalanced penalization: A new approach to encode inequality constraints of combinatorial problems for quantum optimization algorithms. Alejandro Montanez-Barrera, Dennis Willsch, Alberto Maldonado-Romo, and 1 more author. Quantum Science and Technology, 2024. Citations: 55

Solving combinatorial optimization problems of the kind that can be encoded as quadratic unconstrained binary optimization (QUBO) problems is a promising application of quantum computation. Some problems of this class with practical applications, such as the traveling salesman problem (TSP), the bin packing problem (BPP), or the knapsack problem (KP), have inequality constraints that require a particular cost-function encoding. The common approach is to use slack variables to represent the inequality constraints in the cost function. However, slack variables considerably increase the number of qubits and operations required to solve these problems on quantum devices. In this work, we present an alternative method that requires no extra slack variables and instead uses an unbalanced penalization function to represent the inequality constraints in the QUBO. This function penalizes violations of the inequality constraint more heavily than assignments that satisfy it. We evaluate our approach on the TSP, BPP, and KP, successfully encoding the optimal solution of the original optimization problem near the ground state of the cost Hamiltonian. Additionally, we employ D-Wave Advantage and D-Wave hybrid solvers to solve the BPP, surpassing the slack-variable approach by finding solutions for up to 29 items, whereas the slack-variable approach only handles up to 11 items. This new approach can solve combinatorial problems with inequality constraints using fewer resources than the slack-variable approach, with either quantum annealing or variational quantum algorithms.
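For a single knapsack constraint, the idea can be sketched as follows. Assumptions: the penalty is written as −λ₁h + λ₂h², with h = capacity − used weight (so h ≥ 0 means feasible). For λ₁ > 0 this grows faster for h < 0 than for h > 0. The instance and the λ values are hand-picked for illustration. Because h is linear in the bits and x² = x for binary x, the expression expands into a QUBO without slack variables.

```python
import itertools


def unbalanced_penalty(h, lam1, lam2):
    """Quadratic penalty that is larger for h < 0 (violation) than for h > 0 when lam1 > 0."""
    return -lam1 * h + lam2 * h ** 2


def knapsack_cost(bits, values, weights, capacity, lam1, lam2):
    """Negative total value plus the unbalanced penalty on h = capacity - used weight."""
    value = sum(v * x for v, x in zip(values, bits))
    h = capacity - sum(w * x for w, x in zip(weights, bits))
    return -value + unbalanced_penalty(h, lam1, lam2)


values, weights, capacity = [10, 13, 7], [3, 4, 2], 6
lam1, lam2 = 4.0, 2.0  # hypothetical, hand-tuned for this instance

# Exhaustive search stands in for an annealer or QAOA on this 3-variable toy problem
best = min(
    itertools.product((0, 1), repeat=len(values)),
    key=lambda bits: knapsack_cost(bits, values, weights, capacity, lam1, lam2),
)
print(best)  # (0, 1, 1): items 1 and 2, weight 6, value 20
```

With these λ values the minimum-cost bitstring is the true optimum. In general the method only places the optimum near the ground state, so λ₁ and λ₂ need tuning per problem.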

2023

-

Improving Performance in Combinatorial Optimization Problems with Inequality Constraints: An Evaluation of the Unbalanced Penalization Method on D-Wave Advantage. Alejandro Montanez-Barrera, Pim Heuvel, Dennis Willsch, and 1 more author. 2023 IEEE International Conference on Quantum Computing and Engineering (QCE), 2023.

Combinatorial optimization problems are among the target applications of current quantum technology, mainly because of their industrial relevance, the difficulty of solving large instances of them classically, and their equivalence to Ising Hamiltonians via the quadratic unconstrained binary optimization (QUBO) formulation. Many of these applications have inequality constraints, usually encoded as penalization terms in the QUBO formulation using additional variables known as slack variables. Slack variables have two disadvantages: (i) they extend the search space of optimal and suboptimal solutions, and (ii) they add extra qubits and connections to the quantum algorithm. Recently, a new method known as unbalanced penalization has been presented to avoid slack variables. The method involves a trade-off: slack variables ensure that the optimal solution is given by the ground state of the Ising Hamiltonian, whereas an unbalanced heuristic function that penalizes the region where the inequality constraint is violated offers only the certainty that the optimal solution lies in the vicinity of the ground state. This work tests the unbalanced penalization method on real quantum hardware, D-Wave Advantage, for the traveling salesman problem (TSP). The results show that the unbalanced penalization method outperforms the solutions found using slack variables and sets a new record for the largest TSP solved with quantum technology.
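The qubit overhead of slack variables can be estimated with a short calculation. Assume a constraint Σ wᵢxᵢ ≤ c with non-negative integer weights and a binary-expanded slack s = Σₖ 2ᵏsₖ that must represent every value from 0 to c. Each such constraint then needs ⌊log₂ c⌋ + 1 extra bits, while the unbalanced penalization needs none. The helper names and the example sizes are hypothetical.

```python
def slack_bits(capacity):
    """Number of binary slack variables needed to represent 0..capacity (capacity >= 0)."""
    if capacity < 0:
        raise ValueError("capacity must be non-negative")
    return capacity.bit_length()  # floor(log2(capacity)) + 1 for capacity > 0


def qubit_counts(n_decision_vars, capacities):
    """(qubits with slack encoding, qubits with unbalanced penalization)."""
    with_slack = n_decision_vars + sum(slack_bits(c) for c in capacities)
    return with_slack, n_decision_vars


# Hypothetical example: 20 decision variables and 4 inequality constraints of capacity 100
print(qubit_counts(20, [100] * 4))  # (48, 20)
```

Beyond the extra qubits, each slack bit also couples to every variable in its constraint, which adds connections that must be embedded on the hardware.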

2022

-

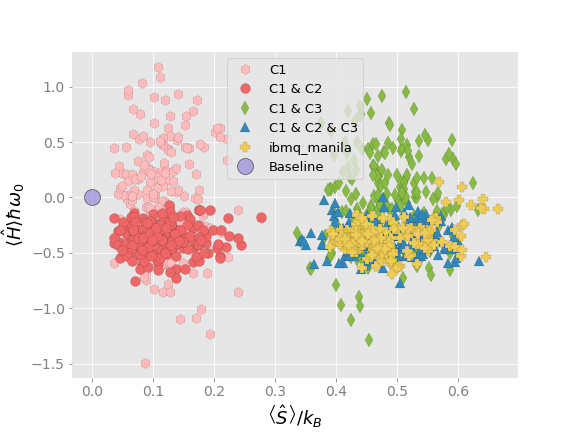

Method for generating randomly perturbed density operators subject to different sets of constraints. Alejandro Montanez-Barrera, R. T. Holladay, G. P. Beretta, and 1 more author. 2022.

This paper presents a general method for producing randomly perturbed density operators subject to different sets of constraints. The perturbed density operators are a specified “distance” away from the state described by the original density operator. This approach is applied to a bipartite system of qubits and used to examine the sensitivity of various entanglement measures to the perturbation magnitude. The constraint sets used include constant energy, constant entropy, and both constant energy and entropy. The method is then applied to produce perturbed random quantum states that correspond to those obtained experimentally for Bell states on the IBM quantum device ibmq_manila. The results show that the methodology can be used to simulate the outcomes of real quantum devices in the presence of noise, which is important in both theory and simulation.
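A much simpler perturbation than the paper's constrained method can illustrate what "a specified distance away" means. Mixing ρ with a random density matrix σ gives ρ' = (1 − t)ρ + tσ, whose trace distance from ρ is exactly t·D(ρ, σ), so t can be chosen to hit a target distance. This NumPy sketch is a stand-in built on assumptions. It does not enforce the constant-energy or constant-entropy constraints, and the Ginibre sampling of σ is an illustrative choice.

```python
import numpy as np


def random_density_matrix(dim, rng):
    """Random full-rank density matrix G G^dag / tr(G G^dag) from a complex Ginibre matrix G."""
    g = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    rho = g @ g.conj().T
    return rho / np.trace(rho).real


def trace_distance(a, b):
    """D(a, b) = 0.5 * sum of |eigenvalues| of the Hermitian difference."""
    return 0.5 * np.sum(np.abs(np.linalg.eigvalsh(a - b)))


def perturb(rho, distance, rng):
    """Return a state at trace distance `distance` from rho by convex mixing with a random state."""
    sigma = random_density_matrix(rho.shape[0], rng)
    d_max = trace_distance(rho, sigma)
    if distance > d_max:
        raise ValueError("target distance exceeds D(rho, sigma); draw another sigma")
    t = distance / d_max  # D(rho, (1 - t) rho + t sigma) = t * D(rho, sigma)
    return (1 - t) * rho + t * sigma


# Bell state |Phi+> = (|00> + |11>) / sqrt(2) as the unperturbed state
phi = np.array([1, 0, 0, 1], dtype=complex) / np.sqrt(2)
rho = np.outer(phi, phi.conj())
rng = np.random.default_rng(0)
rho_p = perturb(rho, distance=0.05, rng=rng)
print(round(float(trace_distance(rho, rho_p)), 6))  # 0.05
```

A convex mixture of density matrices is itself a valid density matrix, so no extra projection is needed here. The paper's constrained method has to work harder to also keep energy or entropy fixed.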

-

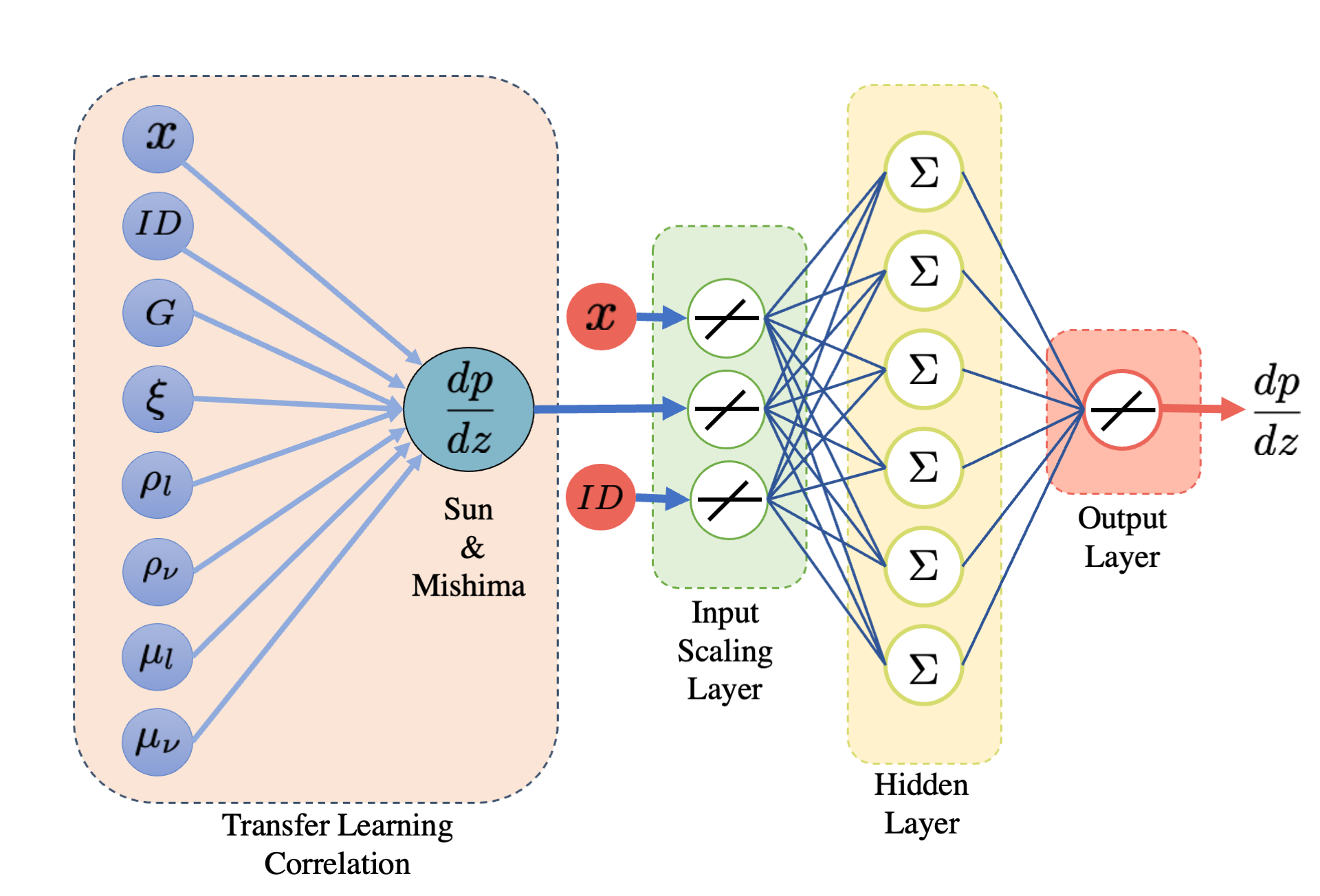

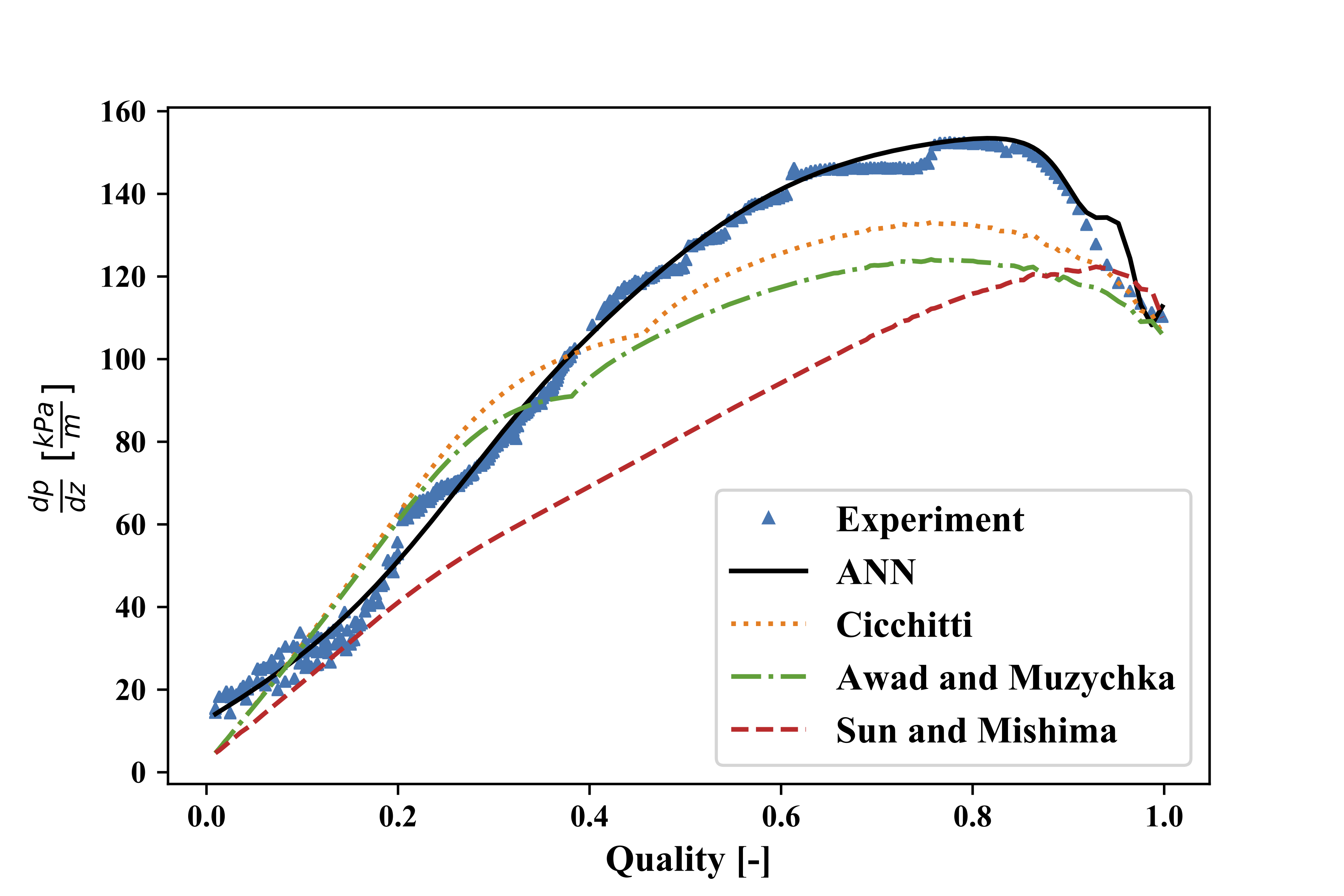

Correlated-Informed Neural Networks: A New Machine Learning Framework to Predict Pressure Drop in Micro-Channels. Alejandro Montanez-Barrera, Juan Manuel Barroso-Maldonado, Andres Felipe Bedoya-Santacruz, and 1 more author. International Journal of Heat and Mass Transfer, 2022.

Accurate pressure drop estimation in forced boiling phenomena is important during the thermal analysis and geometric design of cryogenic heat exchangers. However, current methods to predict the pressure drop suffer from one of two problems: lack of accuracy or lack of generalization to different situations. In this work, we present correlated-informed neural networks (CoINN), a new paradigm that combines the artificial neural network (ANN) technique with a successful pressure drop correlation as a mapping tool to predict the pressure drop of zeotropic mixtures in micro-channels. The proposed approach is inspired by transfer learning, which is widely used in deep learning problems with small datasets. Our method improves ANN performance by transferring the knowledge contained in the Sun & Mishima pressure drop correlation to the ANN. Because the correlation carries physical and phenomenological information about the pressure drop in micro-channels, it considerably improves the performance and generalization capabilities of the ANN. The final architecture has three inputs: the mixture vapor quality, the micro-channel inner diameter, and the available pressure drop correlation. The results show the benefits of the correlated-informed approach: on the experimental data used for training and in a subsequent test, it achieves a mean relative error (mre) of 6%, lower than the 13% of the Sun & Mishima correlation. Additionally, this approach can be extended to other mixtures and experimental settings, a feature missing from other approaches that map correlations with ANNs for heat transfer applications.
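The correlated-informed idea, feeding an existing correlation's prediction to the network as an extra input next to the physical features, can be sketched with a one-hidden-layer network in NumPy. Everything here is hypothetical. `correlation_dp` is a placeholder formula (not the Sun & Mishima correlation), the data are synthetic, and the network size and learning rate are arbitrary. The sketch only shows how the three inputs named in the abstract enter the model.

```python
import numpy as np

rng = np.random.default_rng(0)


def correlation_dp(quality, diameter):
    """Placeholder for an empirical pressure-drop correlation (hypothetical formula)."""
    return 1.5 * quality / diameter


# Synthetic "measurements": the true pressure drop deviates smoothly from the correlation
n = 200
quality = rng.uniform(0.1, 0.9, n)    # vapor quality
diameter = rng.uniform(0.5, 2.0, n)   # inner diameter (arbitrary units)
dp_corr = correlation_dp(quality, diameter)
dp_true = dp_corr * (1.0 + 0.3 * np.sin(3.0 * quality))

# CoINN-style inputs: vapor quality, diameter, and the correlation's prediction
X = np.column_stack([quality, diameter, dp_corr])
Xn = (X - X.mean(axis=0)) / X.std(axis=0)
y = dp_true.reshape(-1, 1)

W1 = rng.normal(0.0, 0.5, (3, 16))
b1 = np.zeros(16)
W2 = rng.normal(0.0, 0.5, (16, 1))
b2 = np.zeros(1)


def forward(inputs):
    """One tanh hidden layer followed by a linear output."""
    hidden = np.tanh(inputs @ W1 + b1)
    return hidden, hidden @ W2 + b2


def mse(pred, target):
    return float(np.mean((pred - target) ** 2))


initial_mse = mse(forward(Xn)[1], y)
lr = 0.01
for _ in range(3000):  # full-batch gradient descent on the MSE loss
    hidden, pred = forward(Xn)
    grad_pred = 2.0 * (pred - y) / len(y)                   # dL/dpred
    grad_hidden = (grad_pred @ W2.T) * (1.0 - hidden ** 2)  # back through tanh
    W2 -= lr * hidden.T @ grad_pred
    b2 -= lr * grad_pred.sum(axis=0)
    W1 -= lr * Xn.T @ grad_hidden
    b1 -= lr * grad_hidden.sum(axis=0)

final_mse = mse(forward(Xn)[1], y)
print(initial_mse, final_mse)
```

The network only has to learn the correction to the correlation rather than the full mapping, which is the intuition behind transferring the correlation's knowledge.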

-

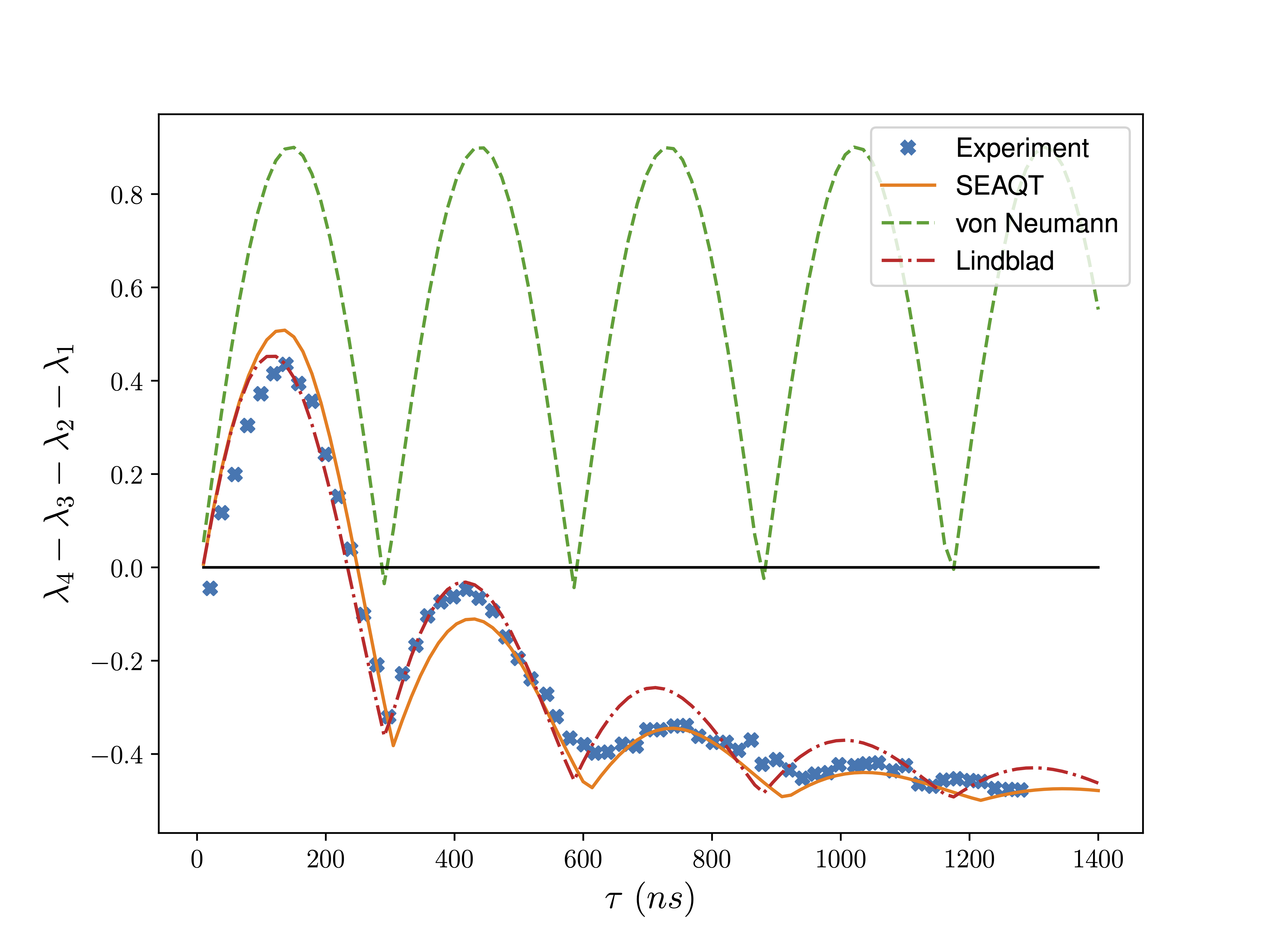

Decoherence predictions in a superconducting quantum processor using the steepest-entropy-ascent quantum thermodynamics framework. Alejandro Montanez-Barrera, Michael R. Von Spakovsky, Cesar E. Damian Ascencio, and 1 more author. Physical Review A, 2022.

The current stage of quantum computing technology, called noisy intermediate-scale quantum technology, is characterized by large errors that prevent its use in real applications. In these devices, decoherence, one of the main sources of error, is generally modeled by Markovian master equations such as the Lindblad master equation. In this paper, decoherence phenomena are addressed from the perspective of the steepest-entropy-ascent quantum thermodynamics framework, in which the noise is seen in part as internal to the system. The framework is also used to describe changes in the energy associated with environmental interactions. Three scenarios, an inversion recovery demonstration, a Ramsey demonstration, and a two-qubit entanglement-disentanglement demonstration, are used to demonstrate the applicability of this framework. It agrees well with the demonstrations and with the Lindblad equation, although it attributes the decoherence to a different cause. These demonstrations are conducted on the IBM superconducting quantum device ibmq_bogota.
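For context, the conventional baseline the abstract compares against can be sketched. This is a Lindblad master equation with an amplitude-damping (T1) jump operator, integrated with RK4 for an inversion-recovery-style decay from |1⟩. This NumPy sketch is not the steepest-entropy-ascent equation of motion. The T1 value, the time grid, and the zero Hamiltonian (rotating frame, no drive) are assumptions.

```python
import numpy as np

SIGMA_MINUS = np.array([[0, 1], [0, 0]], dtype=complex)  # |0><1|: lowers |1> to |0>


def lindblad_rhs(rho, hamiltonian, jump, gamma):
    """d(rho)/dt = -i[H, rho] + gamma (L rho L^dag - 1/2 {L^dag L, rho}), with hbar = 1."""
    coherent = -1j * (hamiltonian @ rho - rho @ hamiltonian)
    ldl = jump.conj().T @ jump
    dissipative = gamma * (jump @ rho @ jump.conj().T - 0.5 * (ldl @ rho + rho @ ldl))
    return coherent + dissipative


def evolve(rho0, hamiltonian, jump, gamma, t_final, steps):
    """Fourth-order Runge-Kutta integration of the Lindblad equation."""
    dt = t_final / steps
    rho = rho0.copy()
    for _ in range(steps):
        k1 = lindblad_rhs(rho, hamiltonian, jump, gamma)
        k2 = lindblad_rhs(rho + 0.5 * dt * k1, hamiltonian, jump, gamma)
        k3 = lindblad_rhs(rho + 0.5 * dt * k2, hamiltonian, jump, gamma)
        k4 = lindblad_rhs(rho + dt * k3, hamiltonian, jump, gamma)
        rho = rho + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
    return rho


T1 = 100.0                                         # hypothetical relaxation time (e.g. microseconds)
rho_excited = np.diag([0.0, 1.0]).astype(complex)  # qubit prepared in |1>
zero_h = np.zeros((2, 2), dtype=complex)
t = 50.0
rho_t = evolve(rho_excited, zero_h, SIGMA_MINUS, 1.0 / T1, t_final=t, steps=500)
print(rho_t[1, 1].real, np.exp(-t / T1))  # numerical vs analytic excited population
```

Here the environment is the sole source of the decay through the jump operator. That is the point of contrast with the framework in the paper, which treats part of the noise as internal to the system.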

2020

-

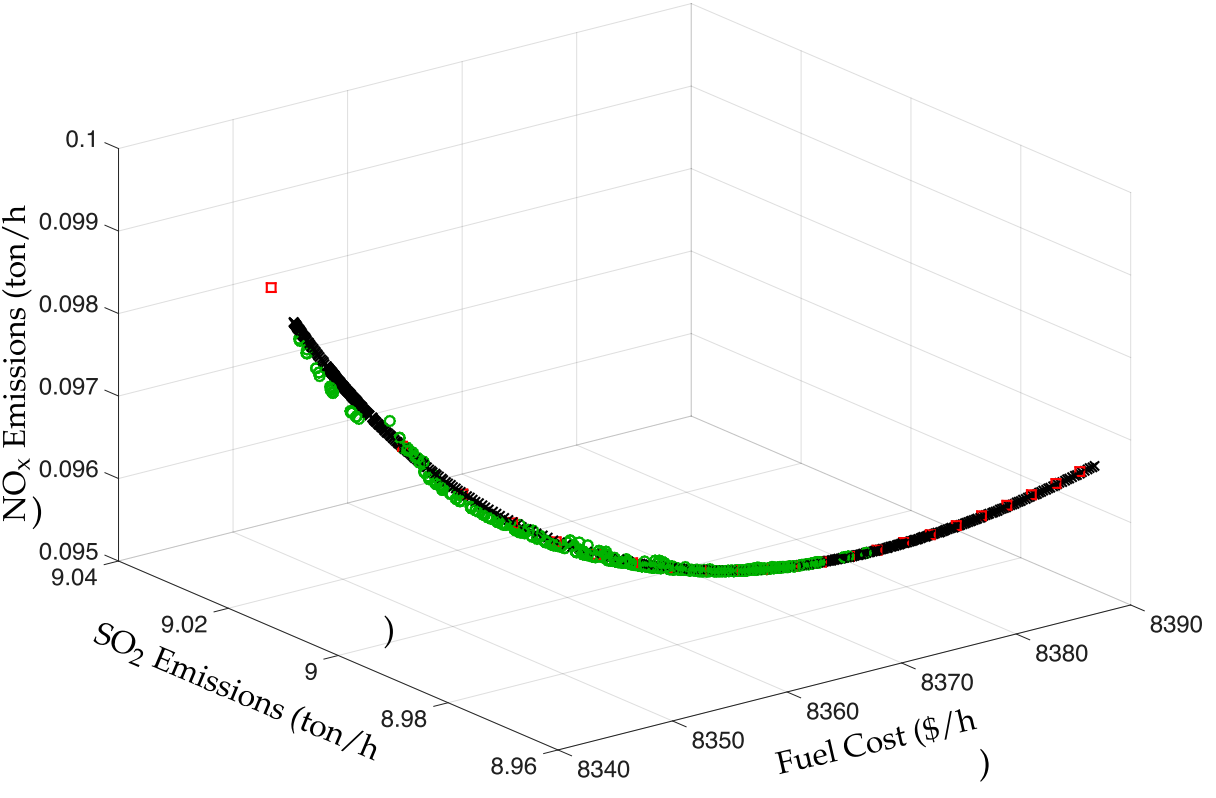

Solution to the Economic Emission Dispatch Problem Using Numerical Polynomial Homotopy Continuation. Oracio I. Barbosa-Ayala, Alejandro Montanez-Barrera, Cesar E. Damian-Ascencio, and 1 more author. Energies, 2020.

-

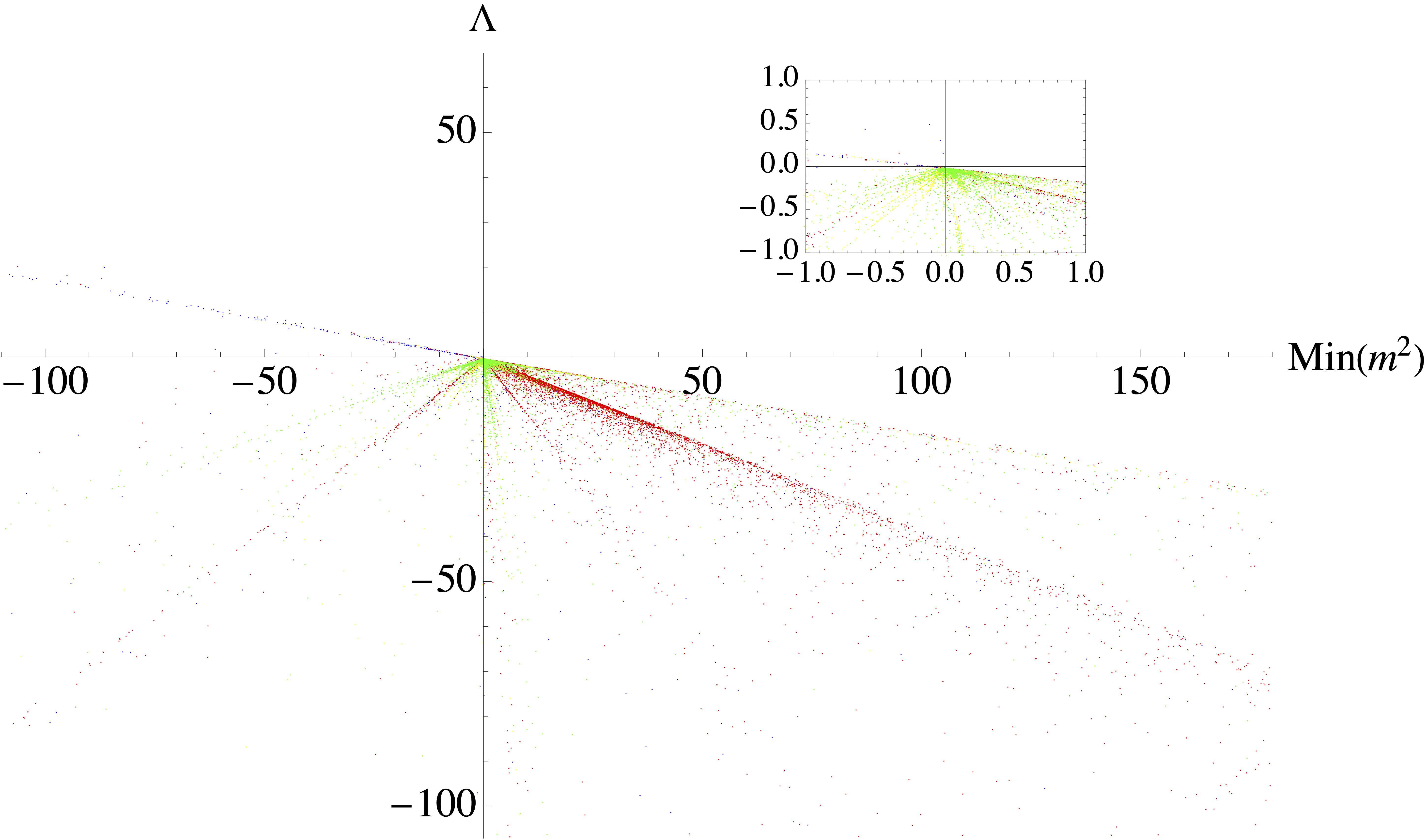

Testing Swampland Conjectures with Machine Learning. Nana Cabo Bizet, Cesar Damian, Oscar Loaiza-Brito, and 2 more authors. The European Physical Journal C, 2020.

We consider Type IIB compactifications on an isotropic torus T^6 threaded by geometric and non-geometric fluxes. For this particular setup, we apply supervised machine learning techniques, namely an artificial neural network coupled to a genetic algorithm, to obtain more than sixty thousand flux configurations yielding a scalar potential with at least one critical point. We observe that both stable AdS vacua with large moduli masses and small vacuum energy and unstable dS vacua with small tachyonic mass and large energy are absent, in accordance with the Refined de Sitter Conjecture. Moreover, by considering a hierarchy among fluxes, we observe that perturbative solutions with small values for the vacuum energy and moduli masses are favored, as are scenarios in which the lightest modulus mass is much greater than the corresponding AdS vacuum scale. Finally, we apply some results from random matrix theory to conclude that the most probable mass spectrum derived from this string setup is one that satisfies the Refined de Sitter and AdS scale conjectures.

-

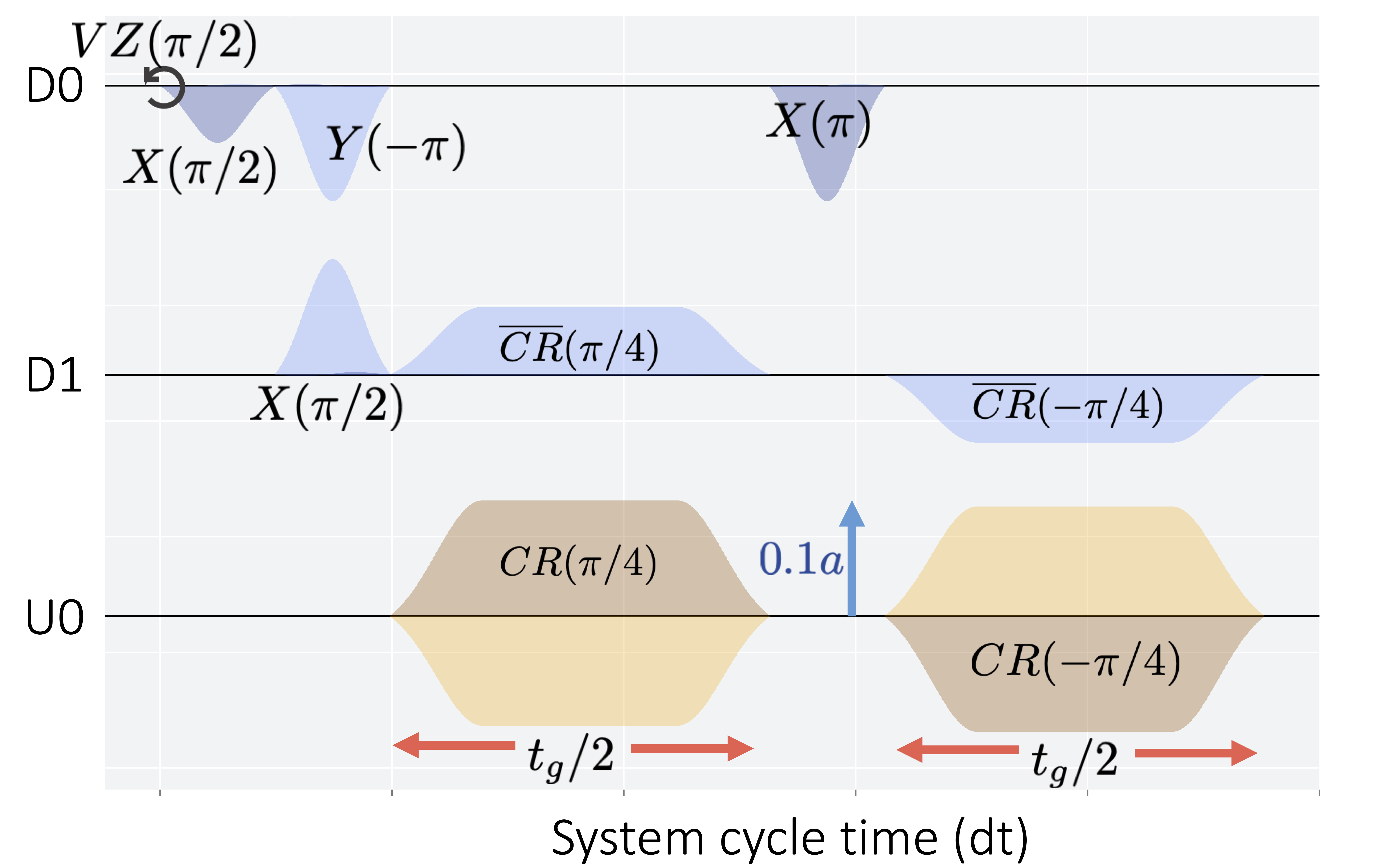

Loss-of-entanglement prediction of a controlled-PHASE gate in the framework of steepest-entropy-ascent quantum thermodynamics. Alejandro Montanez-Barrera, Cesar E. Damian-Ascencio, Michael R. Von Spakovsky, and 1 more author. Physical Review A, 2020.

As has been shown elsewhere, a reasonable model of the loss of entanglement or correlation that occurs in quantum computations is one which assumes that this loss can effectively be predicted by a framework that presupposes the presence of irreversibilities internal to the system. This framework is based on the steepest-entropy-ascent principle and is used here to reproduce the behavior of a controlled-PHASE gate in good agreement with experimental data. The results show that the predicted loss of entanglement is related to the irreversibilities in a nontrivial way, providing a possible alternative, worth exploring, to the approach conventionally used to predict the loss of entanglement. The results provide a means for understanding this loss in quantum protocols from a nonequilibrium thermodynamic standpoint. This framework permits the development of strategies for extending either the maximum fidelity of the computation or the entanglement time.
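Loss of entanglement at the output of a controlled-PHASE gate can be quantified with the Wootters concurrence. The NumPy sketch below prepares CZ|++⟩, which is maximally entangled. As a deliberately simple stand-in for decoherence, it then mixes that state with white noise. This noise model is an assumption for illustration and is not the steepest-entropy-ascent dynamics used in the paper. For this mixture the concurrence is max(0, (3p − 1)/2).

```python
import numpy as np

SY = np.array([[0, -1j], [1j, 0]])
SY_SY = np.kron(SY, SY)
CZ = np.diag([1, 1, 1, -1]).astype(complex)


def concurrence(rho):
    """Wootters concurrence of a two-qubit density matrix."""
    rho_tilde = SY_SY @ rho.conj() @ SY_SY          # spin-flipped state
    eigs = np.linalg.eigvals(rho @ rho_tilde)
    lams = np.sort(np.sqrt(np.clip(eigs.real, 0.0, None)))[::-1]
    return max(0.0, lams[0] - lams[1] - lams[2] - lams[3])


plus = np.array([1, 1], dtype=complex) / np.sqrt(2)
psi = CZ @ np.kron(plus, plus)  # CZ|++> is maximally entangled
pure = np.outer(psi, psi.conj())


def noisy_state(p):
    """Mix the CZ output with the maximally mixed state (hypothetical noise model)."""
    return p * pure + (1 - p) * np.eye(4) / 4


for p in (1.0, 0.8, 0.5, 0.3):
    print(p, round(float(concurrence(noisy_state(p))), 4))
```

Entanglement vanishes entirely below p = 1/3 even though the state still carries some of the CZ output, which illustrates why entanglement is a sensitive probe of gate degradation.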

2019

-

Effects of producer and transmission reliability on the sustainability assessment of power system networks. Jose R. Vargas-Jaramillo, Alejandro Montanez-Barrera, Michael R. Spakovsky, and 2 more authors. Energies, 2019.

This paper details the development and incorporation of a generation and transmission reliability approach in an upper-level sustainability assessment framework for power system planning. This application represents a quasi-stationary, multiobjective optimization problem with nonlinear constraints, load uncertainties, stochastic effects for renewable energy producers, and the propagation of uncertainties along the transmission lines. The Expected Energy Not Supplied (EENS) accounts for generation and transmission reliability and is based on a probabilistic rather than deterministic approach. The optimization is developed for three scenarios. The first excludes uncertainties in the load demand, while the second includes them. The third scenario accounts not only for these uncertainties but also for the stochastic effects related to wind and photovoltaic producers. The sustainability-reliability approach is applied to the standard IEEE Reliability Test System. Results show that using a Mixture of Normals Approximation (MONA) for the EENS formulation simplifies the reliability analysis and makes it feasible within a large-scale optimization. In addition, results show that the inclusion of renewable energy producers has some positive impact on the optimal synthesis/design of power networks under sustainability considerations, but a negative impact on the reliability of the power network.
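The Expected Energy Not Supplied can be illustrated on a toy generation system by exact enumeration of unit outage states. This is a simplified sketch. It ignores transmission lines, load uncertainty, and the Mixture of Normals Approximation (MONA) that keeps the calculation tractable at scale. Unit capacities, forced outage rates, load, and period are hypothetical.

```python
import itertools


def expected_unserved_power(capacities, outage_rates, load):
    """Sum over all up/down unit states of P(state) * max(0, load - available capacity)."""
    expected = 0.0
    for states in itertools.product((True, False), repeat=len(capacities)):
        prob = 1.0
        available = 0.0
        for up, cap, q in zip(states, capacities, outage_rates):
            prob *= (1 - q) if up else q  # independent two-state units
            if up:
                available += cap
        expected += prob * max(0.0, load - available)
    return expected


capacities = [100.0, 100.0, 50.0]  # MW
outage_rates = [0.05, 0.05, 0.10]  # forced outage rates
load = 180.0                       # MW, constant over the period
hours = 8760                       # one year

eup = expected_unserved_power(capacities, outage_rates, load)
eens = eup * hours                 # MWh per year
print(round(eup, 4), round(eens, 1))  # 3.6625 32083.5
```

The number of states grows as 2^(number of units), which is why exact enumeration does not scale to a full test system and an approximation such as MONA is needed.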

-

ANN-based correlation for frictional pressure drop of non-azeotropic mixtures during cryogenic forced boiling. J. M. Barroso-Maldonado, Alejandro Montanez-Barrera, J. M. Belman-Flores, and 1 more author. Applied Thermal Engineering, 2019.

A crucial aspect of Joule-Thomson cryocooler analysis and optimization is the accurate estimation of frictional pressure drop. This paper presents a pressure drop model for the boiling of non-azeotropic mixtures of nitrogen with hydrocarbons (e.g., methane, ethane, and propane) in microchannels. These refrigerant mixtures are important for their applicability in natural gas liquefaction plants. The pressure drop model is based on computational intelligence techniques. Its performance is evaluated with the mean relative error (mre) and compared with three correlations previously identified as the most accurate: Awad and Muzychka, Sun and Mishima, and Cicchitti et al. Comparison between the proposed artificial neural network (ANN) model and the three correlations shows the advantages of the ANN for predicting pressure drop in non-azeotropic mixtures. Existing correlations predict experimental data within mre = 23.9–25.3%, while the ANN achieves mre = 8.3%. Additional features of the ANN model include (1) applicability to laminar, transitional, and turbulent flow, and (2) demonstrated applicability to experiments not used in the training process. Therefore, the ANN model is recommended for predicting pressure drop because of its accuracy and ease of application.
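The mean relative error used to compare the models is, in its usual form, the average of |predicted − measured| / measured. The minimal sketch below assumes that definition and uses made-up numbers.

```python
def mean_relative_error(predicted, measured):
    """Mean of |pred - meas| / |meas|, returned as a percentage."""
    if len(predicted) != len(measured) or not measured:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs(p - m) / abs(m) for p, m in zip(predicted, measured))
    return 100.0 * total / len(measured)


measured = [10.0, 20.0, 40.0]   # hypothetical pressure drops (kPa)
predicted = [11.0, 18.0, 40.0]
print(mean_relative_error(predicted, measured))
```

Because each error is normalized by its own measurement, small pressure drops weigh as much as large ones, so an mre of 8.3% versus roughly 24% reflects accuracy across the whole data range.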